Context Engineering

AI agents only perform as well as the context they are given. Ensuring that the right context is retrieved and served at the right time is therefore crucial to getting the most out of AI-powered systems.

Introduction

This first article introduces the concept of context engineering.

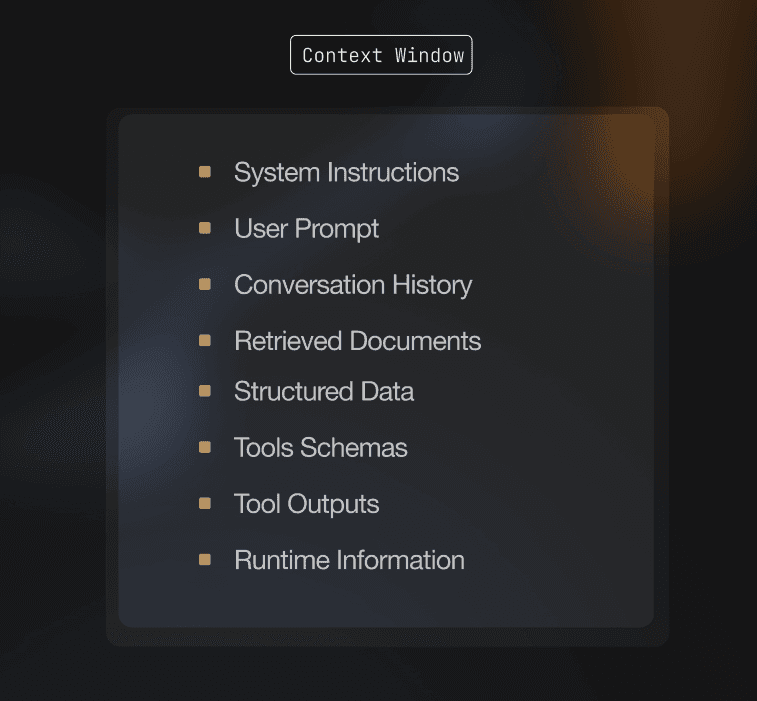

Context engineering is the practice of systematically designing and managing the information an AI model sees in its context window to ensure accurate and reliable outputs.

Rather than focusing solely on crafting prompts, context engineering is concerned with what data, knowledge, history, and rules are loaded into the model’s input each time it runs.

This context can include retrieved documents, database records, application data, support tickets, code snippets, recent conversation history, facts from knowledge bases, tool outputs, or any other information that sits outside the immediate prompt instructions.

The goal of context engineering is to present the model with a tailored, high-signal set of information for each operation, within the limits of the context window. In more practical terms, this means ensuring that when an AI agent is about to answer a question or execute a task, it has all the relevant information, and nothing extraneous, loaded into its short-term “memory.”
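To make the idea of a tailored, high-signal context concrete, here is a minimal sketch of one way to choose what goes into the context window under a token budget. Everything in it is an assumption for illustration: the `ContextItem` structure, the `relevance` scores (imagined as coming from some upstream retriever), the `min_relevance` threshold, and the word-count token estimate, which stands in for a real tokenizer.

```python
from dataclasses import dataclass


@dataclass
class ContextItem:
    """A candidate piece of context (hypothetical structure for illustration)."""
    source: str       # e.g. "support_ticket" or "knowledge_base"
    text: str
    relevance: float  # assumed score from an upstream retriever, 0.0 to 1.0


def estimate_tokens(text: str) -> int:
    """Crude stand-in for a real tokenizer: one token per whitespace-separated word."""
    return len(text.split())


def select_context(items, token_budget, min_relevance=0.5):
    """Greedily keep the most relevant items that fit within the token budget."""
    selected = []
    used = 0
    for item in sorted(items, key=lambda i: i.relevance, reverse=True):
        if item.relevance < min_relevance:
            break  # items are sorted, so everything after this is even less relevant
        cost = estimate_tokens(item.text)
        if used + cost <= token_budget:
            selected.append(item)
            used += cost
    return selected, used


candidates = [
    ContextItem("knowledge_base", "Refunds are issued within 14 days of purchase.", 0.92),
    ContextItem("support_ticket", "Customer reports a duplicate charge on the March invoice.", 0.85),
    ContextItem("chat_history", "User said hello and asked about the weather.", 0.10),
    ContextItem("knowledge_base", "Our office dog is named Biscuit.", 0.05),
]

chosen, tokens_used = select_context(candidates, token_budget=20)
for item in chosen:
    print(f"[{item.source}] {item.text}")
```

With these made-up inputs, only the refund policy and the support ticket are kept, and the two low-relevance items never reach the model. A production system would likely use a real tokenizer and a more careful ranking strategy, but the shape of the decision is the same: rank, filter, and fit within the budget.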

By curating the right context for each query or action, developers can significantly improve an agent’s consistency and usefulness in real-world scenarios. In essence, context engineering asks the question:

“What information should we put in front of the model, and how, to maximize the performance and reliability of its outputs?”

Context Quality

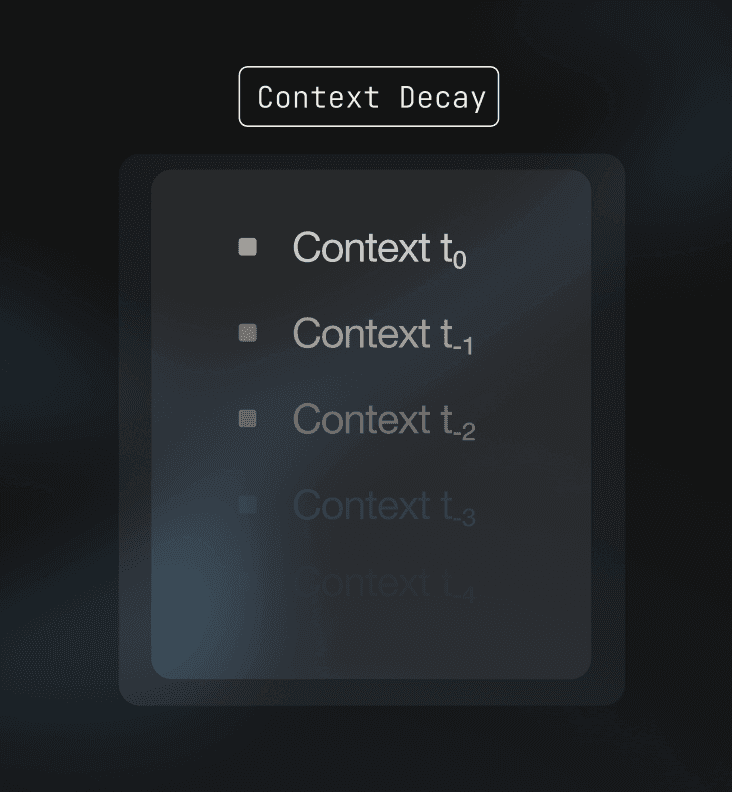

The quality of an AI agent’s outputs is directly determined by the quality of the context it receives. Large language models have powerful reasoning capabilities, but they operate within a finite context window and a limited attention budget. Every token placed into the context competes for that attention.

When context is poorly curated, models struggle. Including too much irrelevant or low-value information can cause the model to lose track of what matters most, leading to inconsistent answers or incorrect reasoning. This effect is often referred to as context rot, where adding more information actually reduces performance rather than improving it.

High-quality context, by contrast, is concise, relevant, and trustworthy. When an agent is provided with a small number of highly pertinent facts or documents, it is far more likely to produce accurate and grounded responses. Unfiltered context stuffing not only wastes computational resources, but also increases the likelihood of hallucinations. When a model cannot clearly identify the information it needs, it may attempt to fill in the gaps on its own.

Context quality also underpins consistency. Agents that operate across multiple turns or execute chains of actions depend on having the right information available each time they run. If key details are missing or outdated, the agent’s behavior can change unpredictably. Reliable agents treat context as a first-class concern rather than an afterthought.
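One way to treat context as a first-class concern is to check it before every run. The sketch below is a hypothetical pre-run check, not a prescribed method: it assumes each fact is stored with the time it was fetched, and it reports which required facts are missing or older than a chosen maximum age. The function name `check_context`, the key names, and the 24-hour threshold are all illustrative.

```python
from datetime import datetime, timedelta, timezone


def check_context(facts, required_keys, max_age, now=None):
    """Return (missing, stale) lists of keys.

    `facts` maps a key to a (value, fetched_at) tuple; this layout is an
    assumption made for the example.
    """
    now = now or datetime.now(timezone.utc)
    missing = [k for k in required_keys if k not in facts]
    stale = [
        k for k in required_keys
        if k in facts and now - facts[k][1] > max_age
    ]
    return missing, stale


# Fixed timestamp so the example is reproducible.
now = datetime(2025, 1, 10, 12, 0, tzinfo=timezone.utc)
facts = {
    "account_plan": ("pro", now - timedelta(minutes=5)),
    "open_ticket": ("duplicate charge", now - timedelta(days=3)),
}

missing, stale = check_context(
    facts,
    required_keys=["account_plan", "open_ticket", "billing_region"],
    max_age=timedelta(hours=24),
    now=now,
)
print("missing:", missing)
print("stale:", stale)
```

Here `billing_region` is reported as missing and `open_ticket` as stale, so the agent could refresh or re-retrieve those details before acting rather than proceeding on incomplete or outdated information.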

In short, better context = better outcomes.

Looking Ahead

Context engineering is becoming a foundational discipline for building reliable AI agents. As systems grow more autonomous and operate over longer time horizons, their success increasingly depends on the quality, structure, and timeliness of the context they receive.

In the coming articles, we will explore the foundations of proper context engineering, the unique mechanics of information retrieval for AI, and what effective context management looks like in practice. We will also show how platforms like Airweave help teams implement robust context pipelines that scale from initial prototypes to full-scale production.